Google has announced plans to release a hardware product, dubbed Edge TPU, to bring its TensorFlow-accelerating Tensor Processing Unit (TPU) technology to the Internet of Things.

Originally announced in May 2016 as an internal project to accelerate the company’s search, translation, and other services, the Tensor Processing Unit is a family of custom-built application-specific integrated circuits (ASICs) designed to accelerate deep-learning algorithms. Since that internal-only unveiling, the technology has made its way to the Google Cloud Platform – and now, for the first time, it will be made available in hardware form to third-party developers.

“Edge TPU is Google’s purpose-built ASIC chip designed to run TensorFlow Lite ML models at the edge,” says Injong Rhee, vice president for Internet of Things (IoT) and Google Cloud at the advertising giant. “When designing Edge TPU, we were hyperfocused on optimising for ‘performance per watt’ and ‘performance per dollar’ within a small footprint. Edge TPUs are designed to complement our Cloud TPU offering, so you can accelerate ML training in the cloud, then have lightning-fast ML inference at the edge. Your sensors become more than data collectors – they make local, real-time, intelligent decisions.”

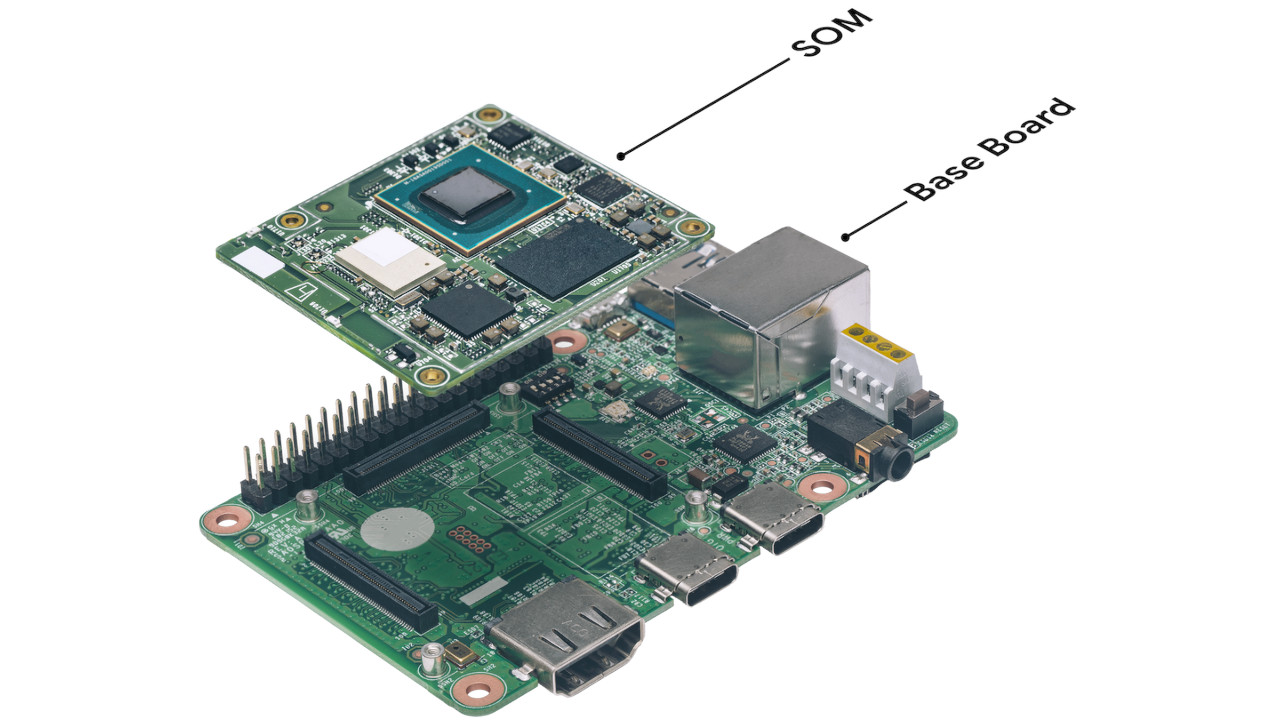

Google has confirmed partnerships with a range of companies to integrate Edge TPU hardware into their products, while also promising to release a system-on-module (SOM) development board, similar in layout to the popular Raspberry Pi, for developers interested in experimenting with the acceleration hardware.

More information is available from Rhee’s announcement post and from the product page, where developers can request early access to the Edge TPU hardware itself.