Arm’s Robert Elliot and Mark O’Conner have published a white paper on the company’s Arm NN machine learning platform and its optimisations for use on low-power embedded devices.

“We expect machine learning to become a natural part of programming environments, with tiny embedded neural networks being part of program execution,” the pair explain of the inspiration behind Arm NN. “To prepare for this, we’ve developed a low-overhead inference engine with the ability to import a file produced by a handful of machine learning frameworks. This supports a ‘write once, deploy many’ approach to development, with the same framework able to target the Cortex-A class cores used in high-end mobile as well as the Cortex-M class cores used in processing environments with very small memories. We’ve spent significant effort to make sure that good performance is achieved on all of these processors.”

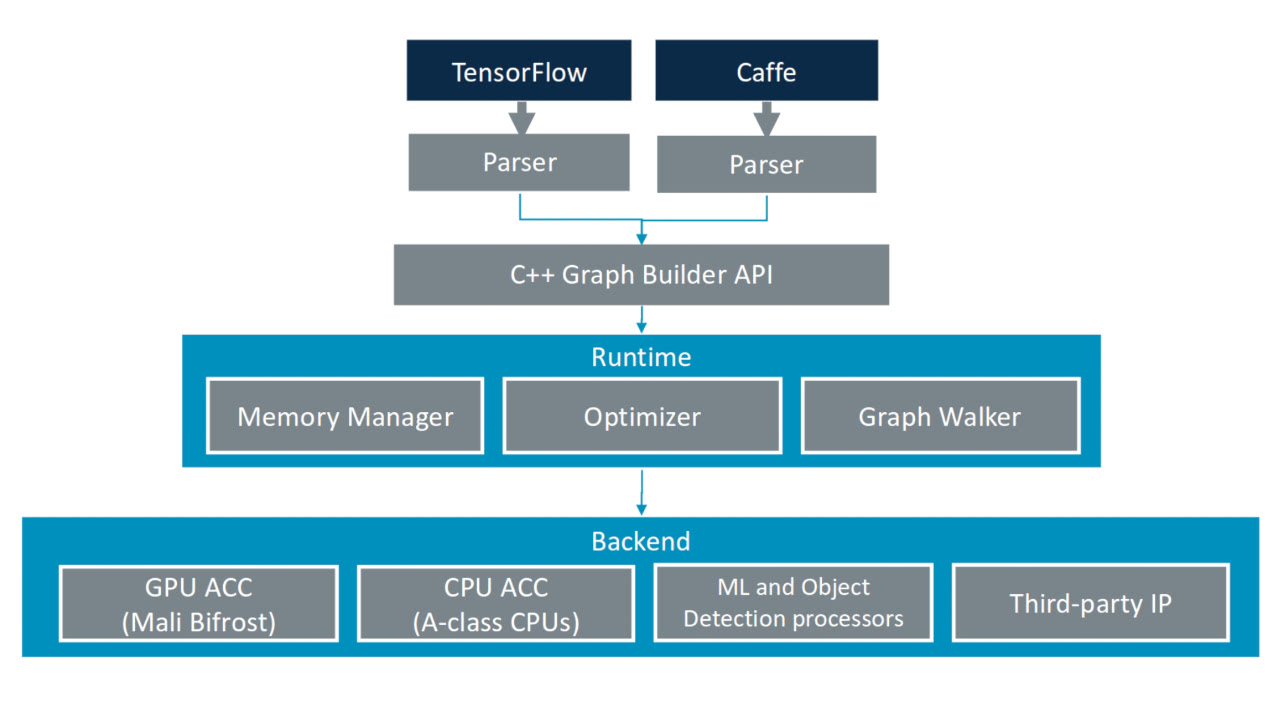

“Arm NN bridges the gap between existing frameworks and the underlying Arm IP. It enables efficient translation of existing neural network frameworks, including TensorFlow and Caffe, allowing them to run efficiently, without modification, across Arm processing platforms. The inference engine can be distributed to different devices while taking advantage of the key optimizations of each.”

In the paper’s practical example, Robert and Mark demonstrate importing a TensorFlow file and then optimising it for embedded devices. They also promise that ongoing optimisations will allow for seamless cloud-to-edge deployment, improved heterogeneous scheduling, and better compiler tools that simplify arithmetic sequences and reduce memory access and bandwidth requirements.

The paper is available following free registration on the official Arm website.